Introduction to CDC

According as to its definition and to its aims, a Data Warehouse (DWH) must maintain the history of business measures' changes. Thus the ETL processes of the Data Warehouse loading must be able to detect the data changes which have occurred in source operation systems during the business operations.

The ability to detect the changed data in source systems and capture these changes is called Change Data Capture (CDC).

All stages of Data Warehouse loading processes are usual difficult, but, as a rule, stage of handling Change Data Capture is the most difficult and challenged task. Although the aim of this operation seems simple: just detect the data that have been changed since the last load, implementation of CDC can cause many problems and questions. In this article, I try to define and describe the different approaches to realize CDC.

There are theoretically several possible techniques and technologies to handle the change data capture processes (CDC process):

- full comparing source and target;

- comparing source and target by using CRC;

- using transaction log files;

- using commercial change data capture applications;

- using DBMS's CDC-feature;

- using triggers;

- using DBMS replication;

- using an indicator of update on source records.

All of these methods have advantages as well as disadvantages.

Influence of source on CDC

Before considering different types of CDCs and their pros and cons, let us review how data can be changed in the source system and what types of changes can occur, because these things influence on some implementation of Change Data Capture processes.

The records of operational source system can be changed by users through using a variety of business applications (this is a standard way), by batch update events, or by database administrators through direct manual changing of a field's value. Notice, sometimes source system data are changed without using business software, and CDC processes must capture all as changes made through standard methods and changes made through non-standard methods.

Three following types of changes which Change Data Capture processes must detect occur in the source system: inserting new records, updating one or more fields of existing records, deleting records.

For better understanding what CDC processes should do and why source system occurrences impact these processes, let us take an example of almost practical case.

Example of CDC and data loading

Assume that we have following requirements for ETL processes that detect changes of data source and populate the DWH tables.

- All DWH tables shall have the special field that is called "Status_Code". The field shall contain value "I" for records that represent records inserted in the source table, contain value "U" for records that represent records updated in the source table, and contain value "D" for records that was deleted from the source table.

- All DWH tables shall have the special field that is called "Date_Begin". The field shall contain the beginning date of actuality of this record.

- All DWH tables shall have the special field that is called "Date_End". The field shall contain the expiration date of this record's actuality or nothing if record still contains actual information.

- ETL processes should detect all source records that have been added, changed, and deleted since last loading data in DWH.

- If a source record that has been added since last loading data in DWH is detected, ETL process shall insert new record in DWH table, populate all necessary DWH fields with counterpart source fields' values, assign value "I" to Status_Code field, and assign current date to Date_Begin field.

- If it is detected that a source record has been deleted since last loading data in DWH, ETL process shall find the DWH record that represent deleted record, assign value "D" to Status_Code field, and assign current date to Date_End field.

- If a source record that has been edited since last loading data in DWH is detected, ETL process shall find the DWH record that represent this edited record, assign current date to Date_End field. After that ETL process shall insert new record in DWH table, populate all necessary DWH fields with counterpart source fields' values, assign value "U" to Status_Code field, and assign current date to Date_Begin field.

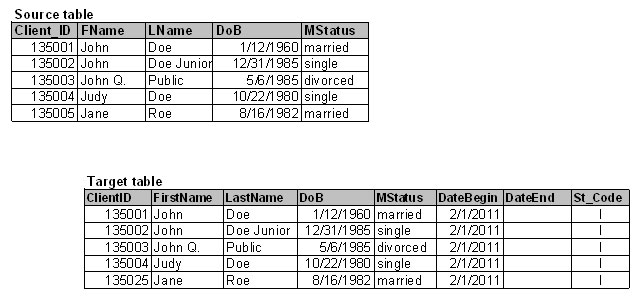

For example, imagine that there are two tables: Source table and Target table. Every day ETL processes load data to the Target table. First load (Initial Load) occurred at February 1st and populated Target table as shown in Figure 1.

Figure 1. Initial Load

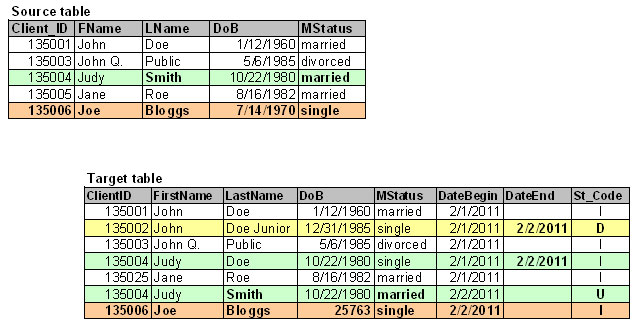

After that, changes happened in the World and in the Source table. John Doe Junior ceased to be our client, and record about him was deleted. Judy Doe got married and changed her last name. Also, new client came to the company. Figure 2 shows how these changes must be represented in the Target table after incremental load.

Figure 2. Incremental Load

Copy of previous data

Discussing some of the approaches, we mention "copy of previous data" or "previous copy of the table". This means the copy of the source table that has been stored in the staging area or, in other words, in the "back room" area since the time of the previous load. How this works? The copy of the source table is usually created in the staging area even for firs load. When the process of initial load is complete, this copy is not deleted but preserved with name "previous copy of the table". During the next load, this preserved copy of previous data is used for change data capture needs. Since the Change Data Capture process is complete, it drops the "previous copy of the table", renames the current copy of the source table to "previous copy of the table".

You may ask why we do not use the data warehouse table for CDC's needs, because it contains all necessary previous data. It is possible of course, usually in so simple case like our example, but, in real DWH project, it is more complicated and unproductive than to use copy of the source table in the staging area.

Full comparing source and target

In simple words, this method of CDC compares two snapshots of source data. The ETL process is forced to download the complete source table and compare it record by record and field by field with the previous copy of the processed table, so the previous extract from the source system must be remained in the staging area of ETL system since last loading. Thus a snapshot of a source table that is taken at the moment of extraction are compared in consecutive order, record by record and field by field, with the previous snapshot of a source table that was taken at the moment of last extraction.

In our example case, speaking a pseudo SQL, the condition for search of updated records might look like the following line:

WHERE current.Client_ID = prev.Client_ID AND (current.FName <> prev.FName OR current.LName <> prev.LName OR current.DoB <> prev.DoB OR current.MStatus <> prev. MStatus)

Two additional steps are necessary to find records that were inserted and deleted.

The condition for search of inserted records might look like the following line:

WHERE current.Client_ID NOT IN (SELECT Client_ID FROM prev)

The condition for search of deleted records might look like the following line:

WHERE prev.Client_ID NOT IN (SELECT Client_ID FROM current)

The Pros and Cons of the Full Compare Method

The disadvantage of this Change Data Capture approach is very resource-intensive implementation of this technique. Although this approach is probably the easier for realization, the resulted processes are unwieldy and require an excessive amount of time and resources in running. You can increase speed of processing and reduce resource consumption by using the CRC (cyclic redundancy checksum) method.

The advantage is that this technique guarantees to find all changes of source that has been made since last load.

CRC-code

This technique of record comparisons uses the cyclic redundancy checksum (CRC) algorithms to capture changed data. CRC (cyclic redundancy checksum) code is a long integer value that is absolute sensitive to the information that is used for calculation this code.

In this case, the data ETL process also as the full compare method downloads the complete current copy of the source table and compares its record by record with the previous copy of the processed table. Whereas the full compare approach compares each record field by field, this technique compares just two values of CRC function in each pair of records.

The CRC-code is computed for each record and stored as additional field in the copy of previously loaded data. The input parameter of the cyclic redundancy checksum function, in our case, is a concatenation of all record's fields whose changes we want to track. When the current load occurs, the ETL process calculates current CRC-code and compares it with the previous value of CRC of the same record. This operation is performed on each record. If even one character or figure in a table record has been changed between two loads, the cyclic redundancy checksum codes for that record would be different.

The condition for search of updated records might look like the following line written in pseudo SQL:

WHERE current.Client_ID = prev.Client_ID AND CRC_Function(current.FName + current.LName + current.DoB + current.MStatus) <> prev.CRC_Field

The Pros and Cons of Comparing by Using CRC

The disadvantage of this CDC approach is that, in common with the full compare method, this method requires two additional steps to find records that were inserted and deleted.

The advantage is that this technique allows recognize changed data more efficient than the field by field compare method does.

Delta file

Very rarely, operational applications produce a "delta" file. This file contains all data changes that were made by using this application during business operational activity. If there is the delta file, the Change Data Capture process becomes very easy and efficient.

The main problem is that no many applications generate delta file. The second serious problem is that if delta file is generated by application code instead of using a database trigger mechanism, it loses the changes made directly in a database without using this application.

Transaction log file

Most database management systems usually have a transaction log that records all database changes and modifications made by each transaction. Scanning and analyzing the contents of the database transaction log, it is possible to capture changes made to the database.

Actually there are two possible approaches to use a transaction log to implement CDC. First one is periodical scraping of a log file at scheduled basis. Second one is a permanent sniffing a log file for capturing transaction on real time basis.

The Pros and Cons of Using Transaction Logs to CDC

The advantage of using Transaction Logs to Change Data Capture is that the process of transaction log analyzing does not affect the operational transactional database.

There are a few disadvantages and difficulties. If a transaction log is used for capture changes, it is necessary to coordinate well with operational data system administrators who can periodically truncate log file for performance goals or do somewhat else that can affect ETL processes. In additional, it depends on the transaction log setup options, sometimes transaction log does not record information about changes made in batch mode.

Main difficulty with using a log files is that their internal structures usually are not easy intelligible. Also, data management systems of different vendors vary in the log file formats. Many efforts can be needed to develop an application that analyzes the log file, yet these efforts do not guarantee that the application would work correctly after installing patches or updates of the database management engine. Because of so tough efforts, it makes sense to choose an available Change Data Capture application in the market as a solution rather than develop it from scratch.

Commercial Change Data Capture Applications

Some third-party software vendors provide the Change Data Capture applications. These software tools usually read database log files to detect data changes.

The Pros and Cons of Using Commercial CDC Applications

CDC applications provide wide Change Data Capture functionality and compatibility with a variety of types and versions of data sources.

There are some disadvantages. The cost of new CDC application can be substantial by itself, and the additional software can cause a necessary to hire new employees or teach current staff to use this tool that mean additional costs. Also, although CDC applications are compatibility with many versions and types of database management systems, exactly your version can be unsupported or has functionality limit. Moreover, this is the big secret, as anything else CDC applications can contain some bugs.

Trigger method

One of seemed like the best techniques for CDC is adding triggers to tables whose changes should be controlled. In this approach, triggers are turned on when an operation of insert, update or delete happens and record the information about the changes to specific tables or files.

Why does this CDC trigger method just seem like the best one?

It looks like an ideal, but matters of performance, an organization policy or operational data owners can be the insurmountable obstacles to implement additional triggers.

RDBMS's CDC-feature

Some database management systems vendors provide the CDC functionality embedded in newest versions of their products.

The Pros and Cons of Using DBMS's CDC-feature

Good news is that DBMS's CDC-feature provides a ready-for-using mechanism of capturing changed data.

The problem is that just some few vendors provide this option for a limited amount of certain versions of database management system. If you have heterogeneous source systems or inappropriate out of date version, this method probably cannot help you resolve the problem of capturing changed data.

RDBMS built-in replication

Replication is the process of automatically distributing copies of data between few of database servers, and keeping the distributed information synchronized. If RDBMS of your source system supports replication, you can also use this mechanism to replicate data to a data warehouse.

There are few disadvantages. Replication is not easy to install and to setup by itself, and replication is not exactly the change data capture mechanism, so this requires additional efforts to use replication to the CDC needs. Also, because of incompatibility replication might be not so good solution in case of heterogeneous source systems..

Timestamps, audit column, or some other indicator of update

It seems like a silver bullet to select records from the source table where value of a special field equal the specified date and reach all changes occurred on this specified day, but do not hurry to say: "Hurrah!" when you discover a field with a name that looks like Modified_Date, Timestamp, or AuditColumn in the source table. Unfortunately, the World is not ideal, and often such fields just pretend to store the date and time a record was added or modified.

Why does this happen? Sometimes, because of performance reasons, the indicator-of-updating field is updated by front end applications instead of database triggers, so this field does not react to changes made by batch update events or database administrators. In other cases, just date of record's creation is registered, and date of changing is not. Also, there can be some bugs in the code of the application or the database trigger.

When you find a field that, how you think, stores information about the date and time of record's changes, you must analyze clearly it does that precisely and always. If you find a NULL values or discover that value of this indicator-of-updating field is not changed after some transactions, you have to use a different way for detecting changes.

Although we are sure the indicator-of-updating field works well, it makes sense to periodical control this field and compare key totals between the source system and the data warehouse. Moreover, this approach also requires additional activities to detect deleted records.

In conclusion

This article is only brief overview of the most feasible CDC methods and surrounding issues. Although the goal of change data capture is clear and ways to develop CDC seem understandably, real processes of its realization can have a lot of stumbling blocks. There can be several approaches applied to CDC simultaneously in the same project. A choice of appropriate approaches to Change Data Capture is not trivial and requires thorough research and profound analysis of source systems and operational software, business processes, IT infrastructure, and requirements for decision support system.